Jupiter has always been my favorite planet to look at (besides Earth…). It’s big, it spins fast, and it’s always dynamic. Even during my long (~16-year) break from astrophotography, I never stopped looking at Jupiter through eyepieces.

This year, Jupiter was not particularly high from my location (up to ~35 degrees elevation), so I did not have high hopes. Surprisingly, advances in modern astro imaging technology, such as higher-sensitivity, lower-noise cameras, atmospheric dispersion correctors, and better post-processing software, have helped overcome a good portion of this challenge.

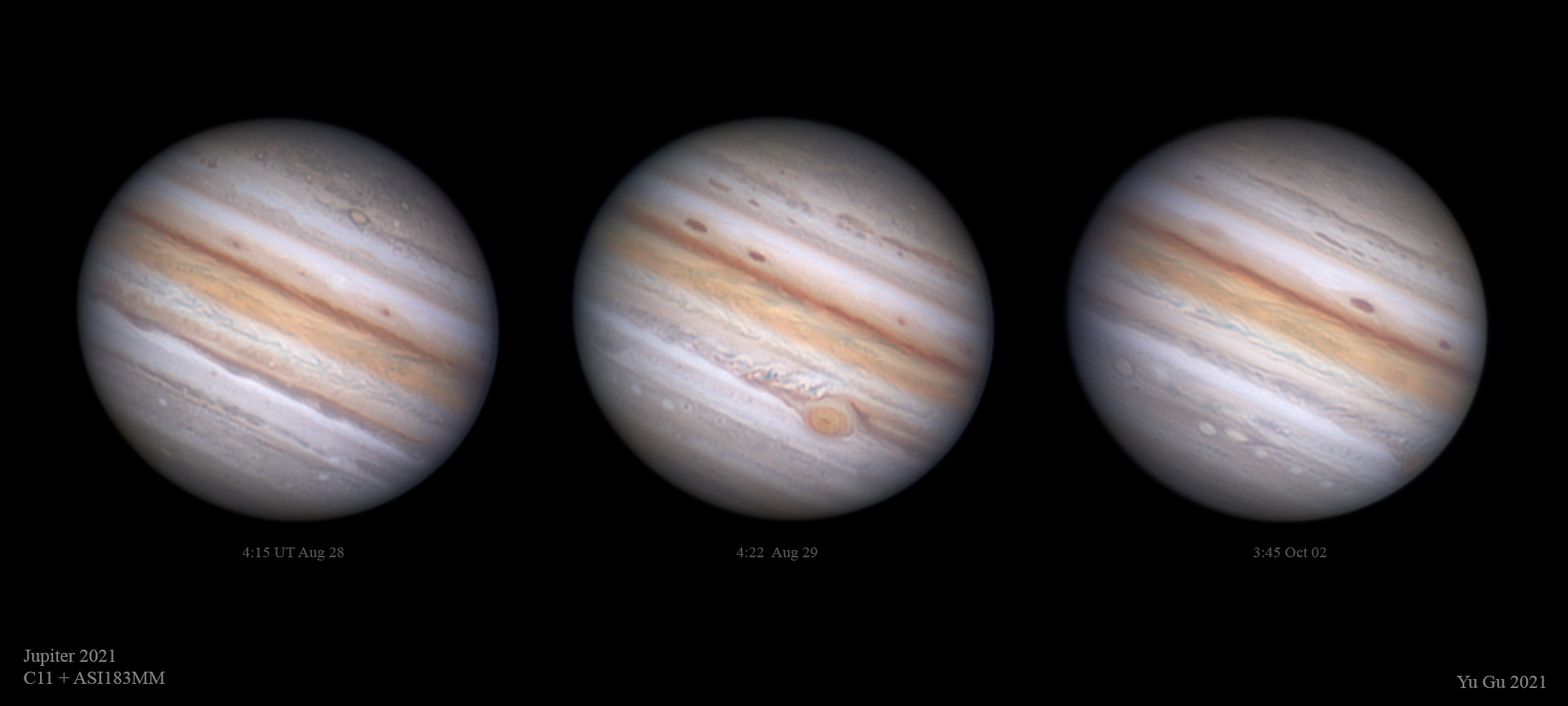

I started the season a bit late, but the first few nights turned out to be among the best.

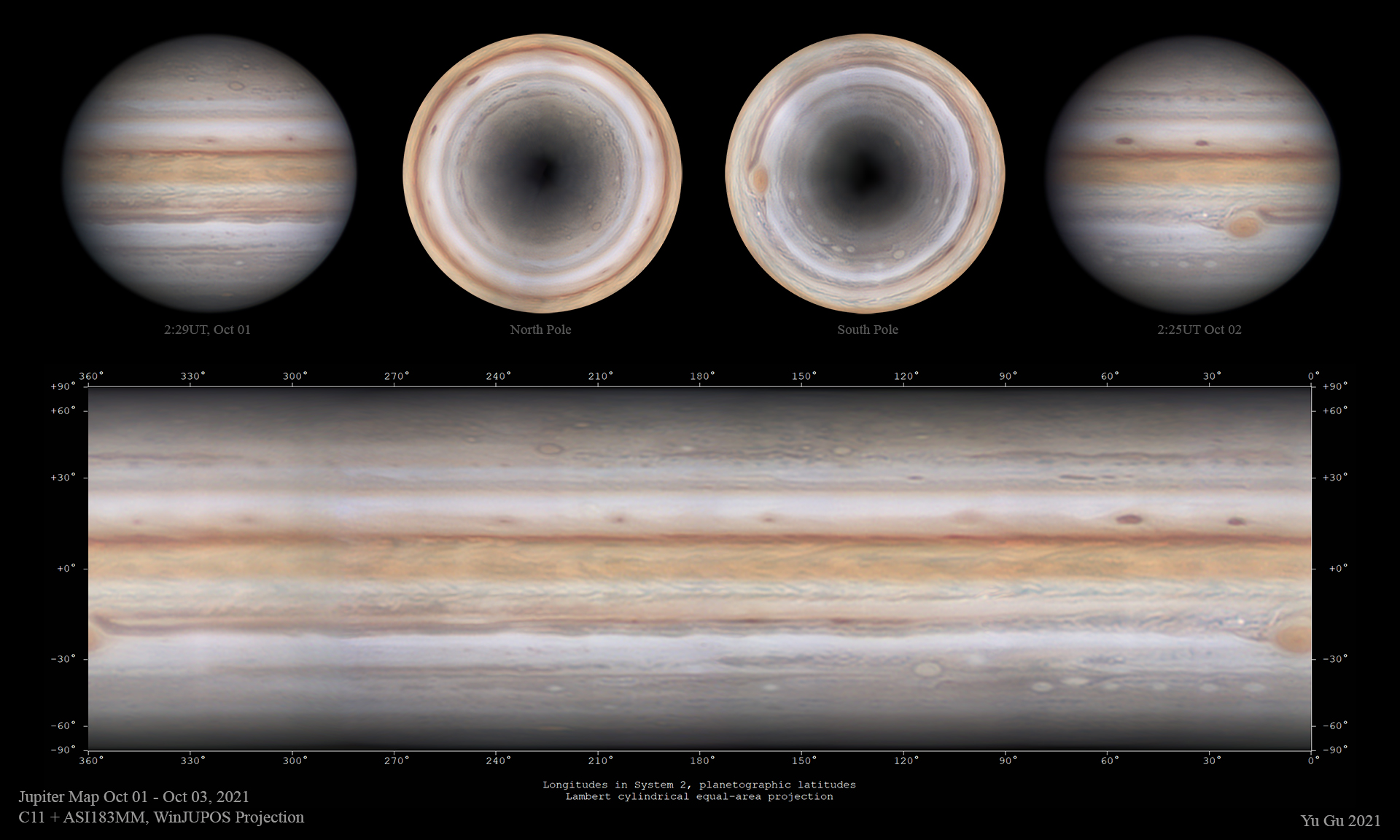

I was also able to create a Jupiter map, a first for me!

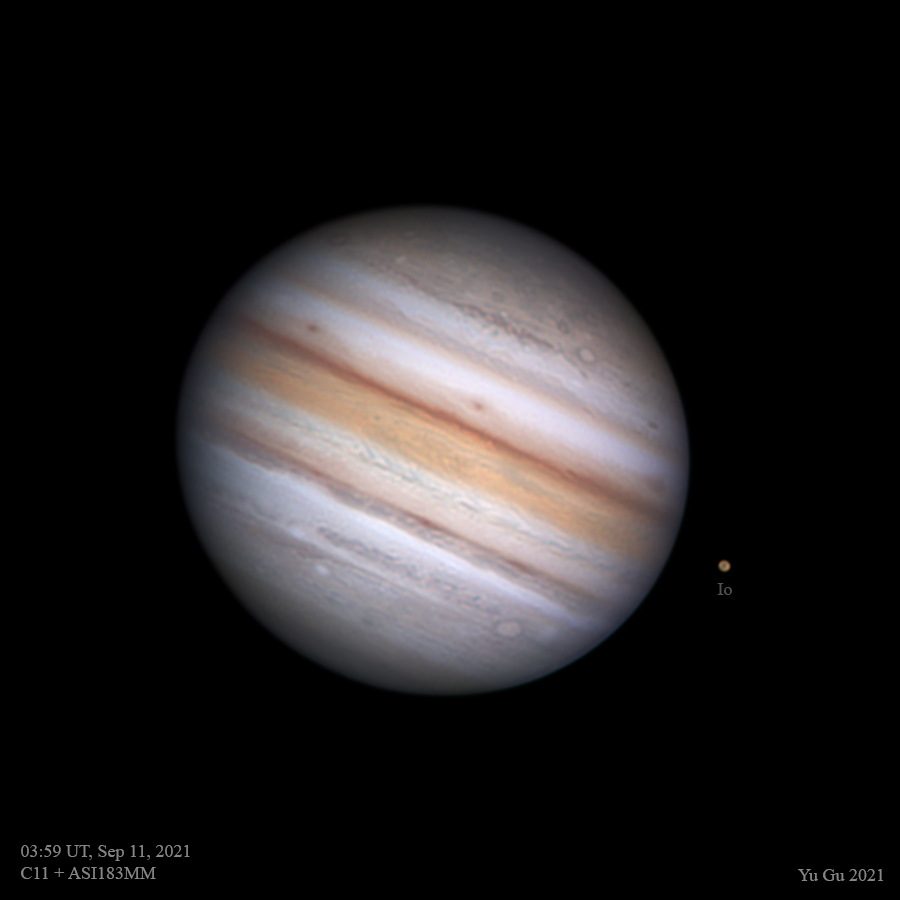

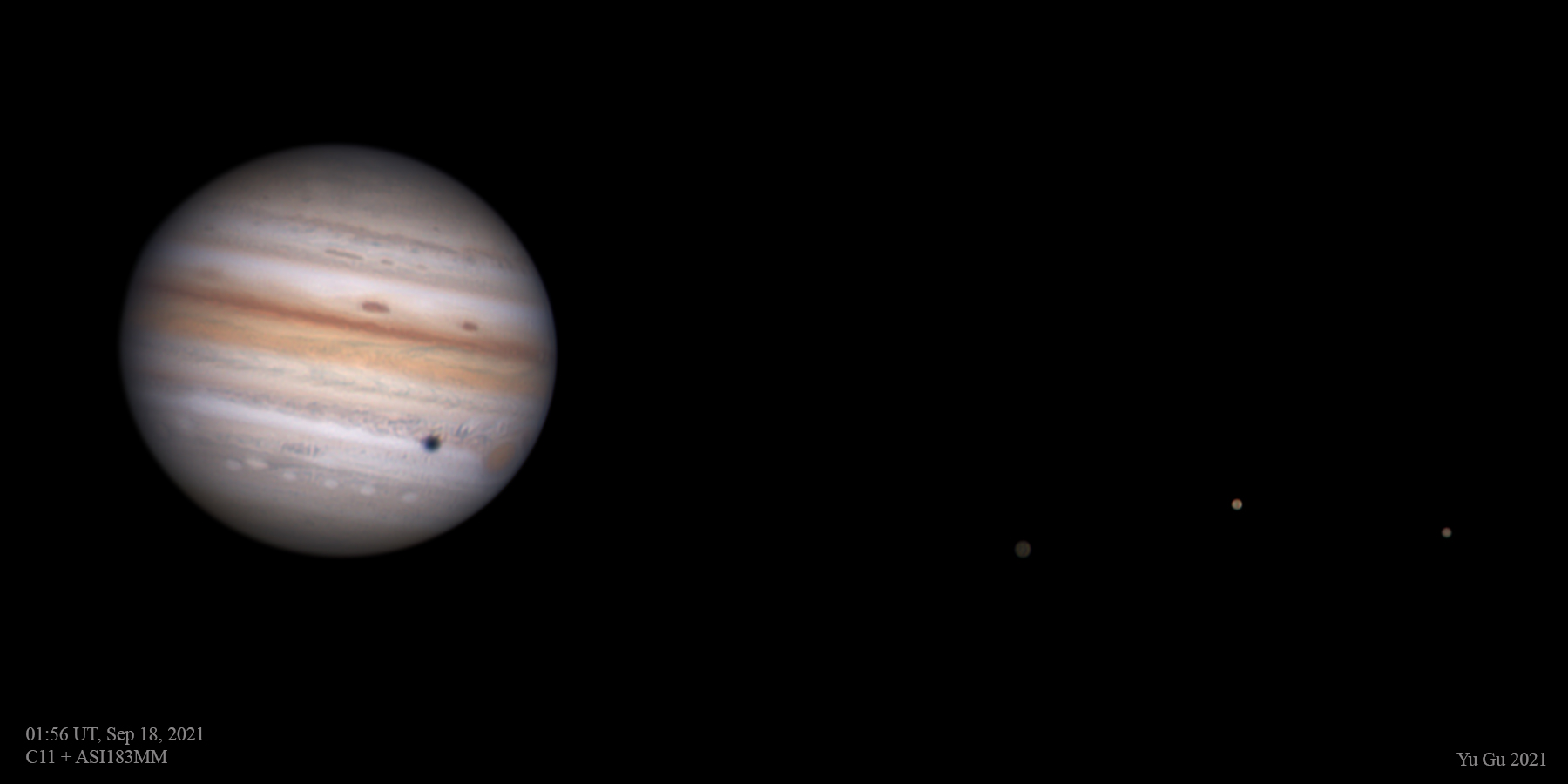

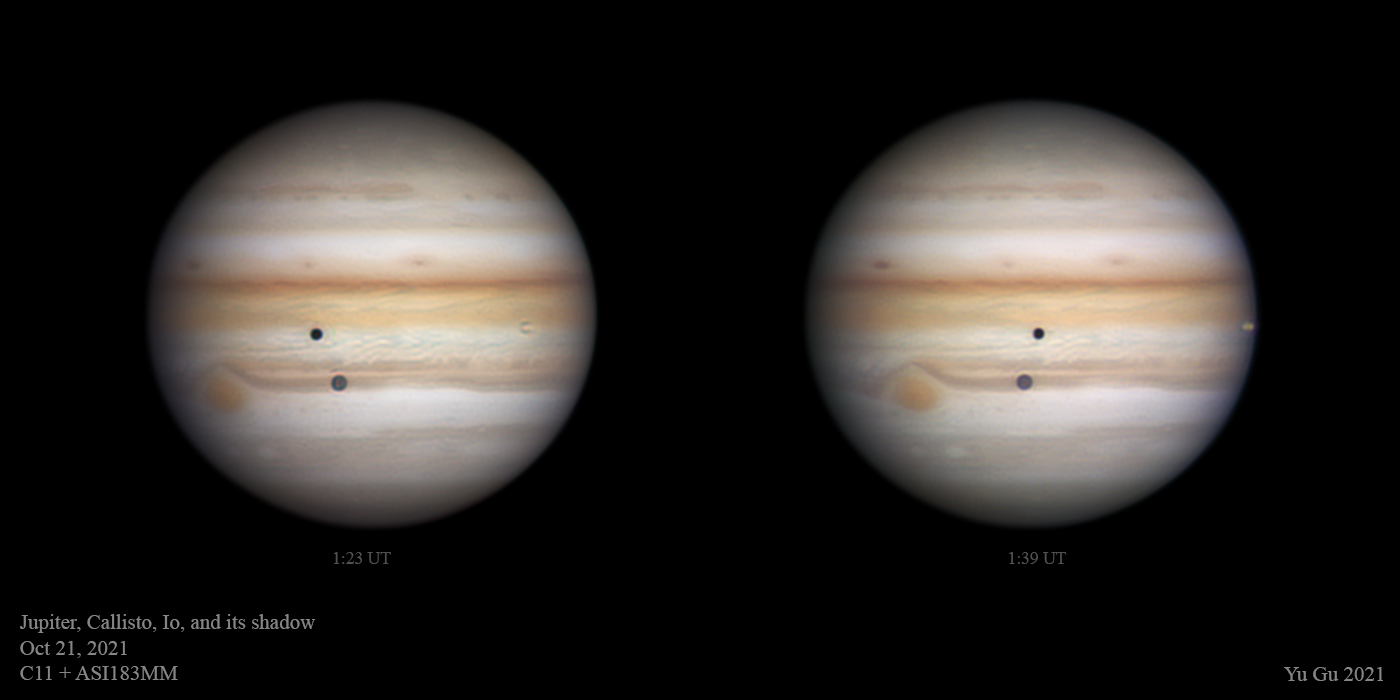

There were interesting moon events throughout the season; here are just a few examples:

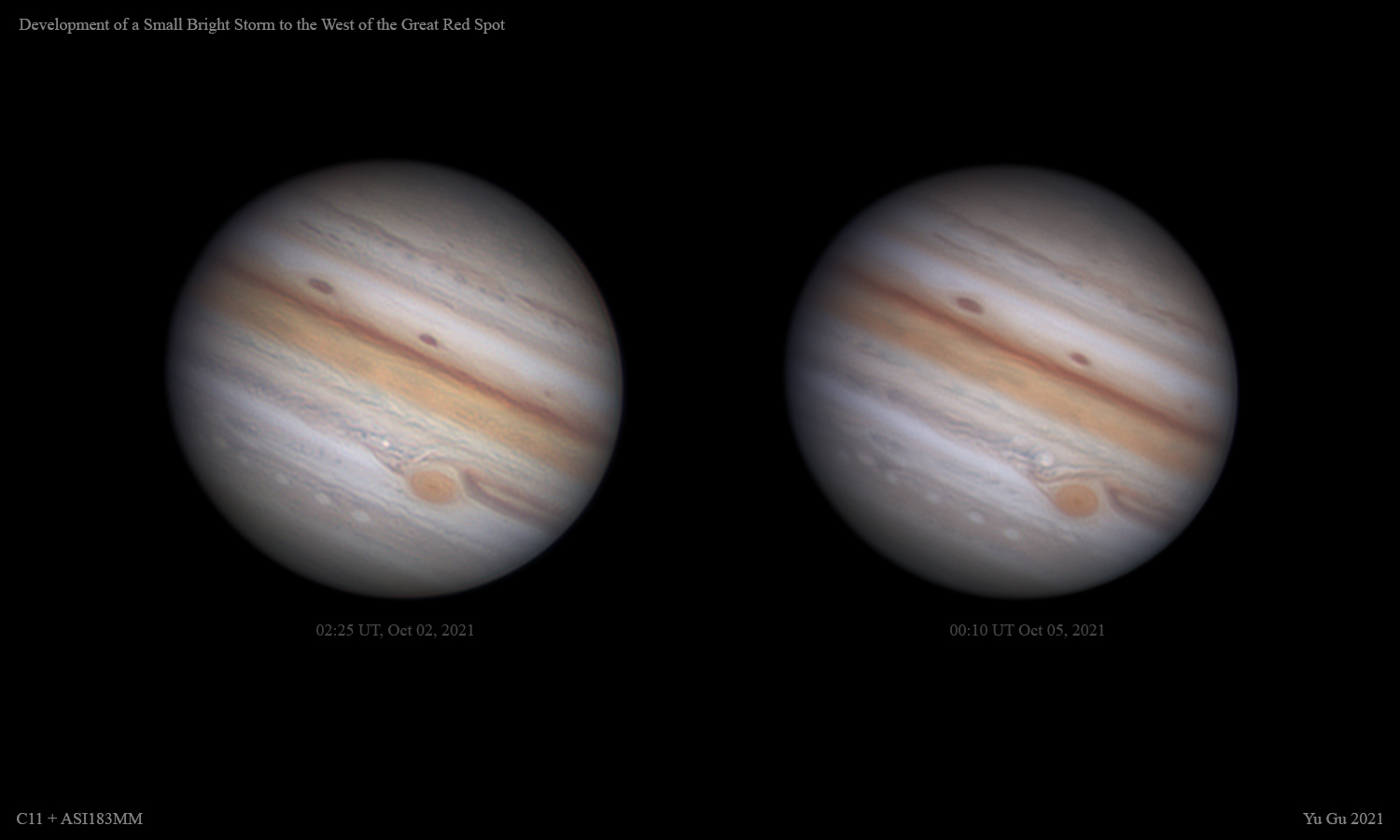

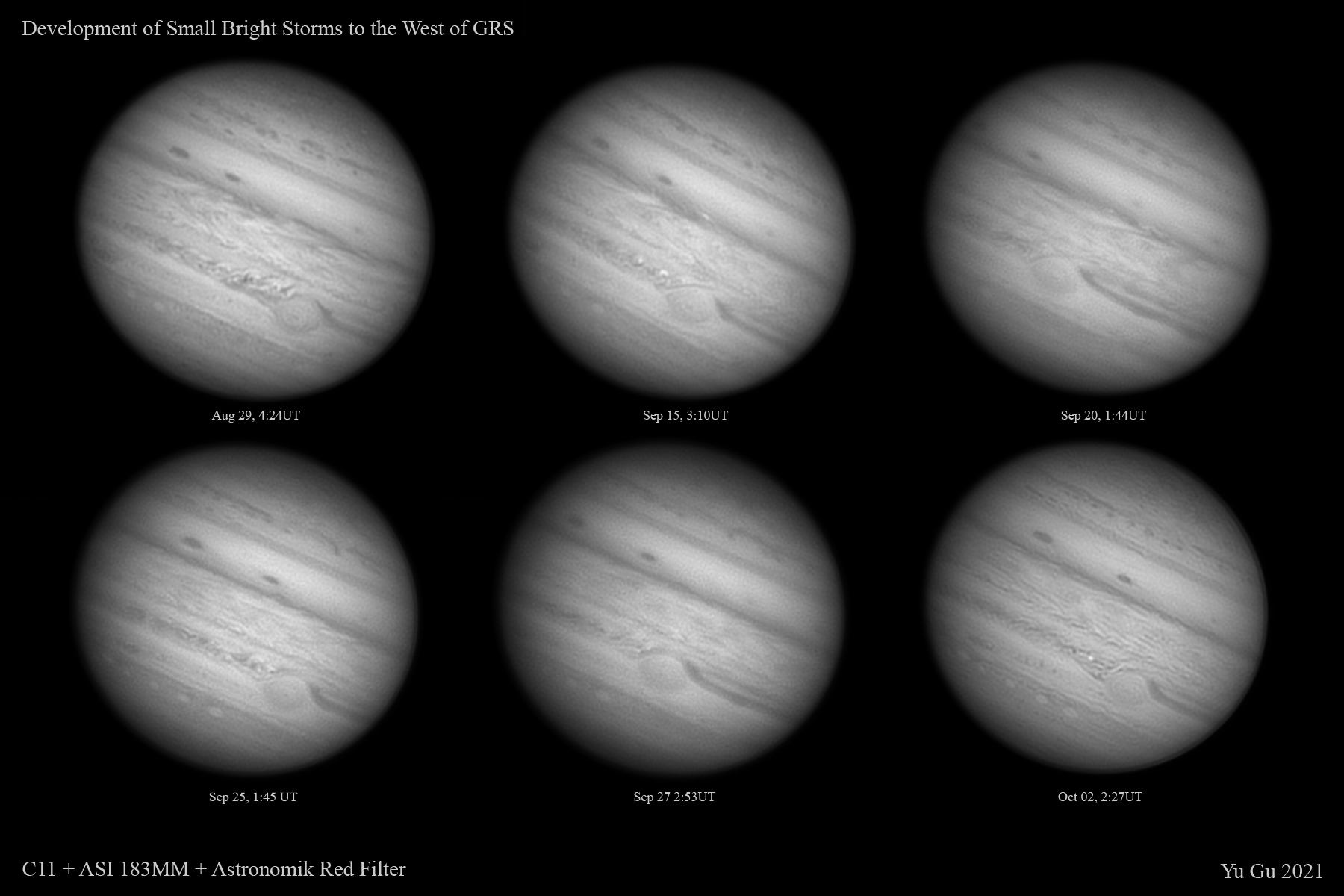

What was most exciting, however, was the detection of a new eruption event near the Great Red Spot (GRS). It was a very bright, snow-white region, and at first I didn’t know what it was. Later I learned that it was a rising plume originating from the lower, warmer layer of Jupiter’s atmosphere. It carried ammonia and water vapor up to the higher, colder layer, where they froze, which produced the high reflectivity. Here is an article that explains it.

It turned out that there were several of these outbreaks in 2021; two of them were captured earlier, on Sep 15:

Finally, here is a Jupiter animation:

What I found interesting were the very large-scale dark features that appeared to be in or near the north polar region. They looked like darker shades rotating with Jupiter. I’m not sure what they could be…

Next year, Jupiter will rise a lot higher (for us northern observers)!