We have been working on robotic precision pollination for a few years now and will continue down this path for the foreseeable future. By “precision”, we mean treating crops as individual plants and recognizing their individual differences and needs, much as we would when interacting with people. If our robots can touch and precisely maneuver small and delicate flowers, they could be used to take care of plants in many different ways.

But why would anyone want robots to take over the bees’ pollination job? We don’t, and I would much rather see bees flying in and out of flowers. What we would like to have is a plan B in case there are not enough bees or other insects to support our food production. With a growing human population, the rate of bee colony loss, and climate change, this could become a real threat. We also want to be able to pollinate flowers in places that bees either do not like or cannot survive in, such as confined indoor spaces (e.g., greenhouses, growth chambers, vertical agriculture settings, or even a different planet).

In our previous project, we designed BrambleBee to pollinate bramble (i.e., blackberry and raspberry) flowers. BrambleBee looks like a jumbo-sized bumblebee with a big arm, but it cannot fly. We did not want to mimic bees’ flying ability; instead, we learned from bees’ micro hair structures and motions (thanks to our entomology team led by Dr. Yong-Lak Park) and used a custom-designed robotic hand to brush the flowers for precision pollen transfer.

BrambleBee served as a proof of concept, and it was fun to watch it work, but there are still many challenges. For example, each flower is unique, and there are many complex situations for a robot pollinator to handle (e.g., tightly clustered flowers, occlusion, deformable objects, and plant motion). How to convert an experimental robot system into an effective agricultural machine that growers will accept is another major challenge. These are the research topics we will tackle with our next robot, StickBug.

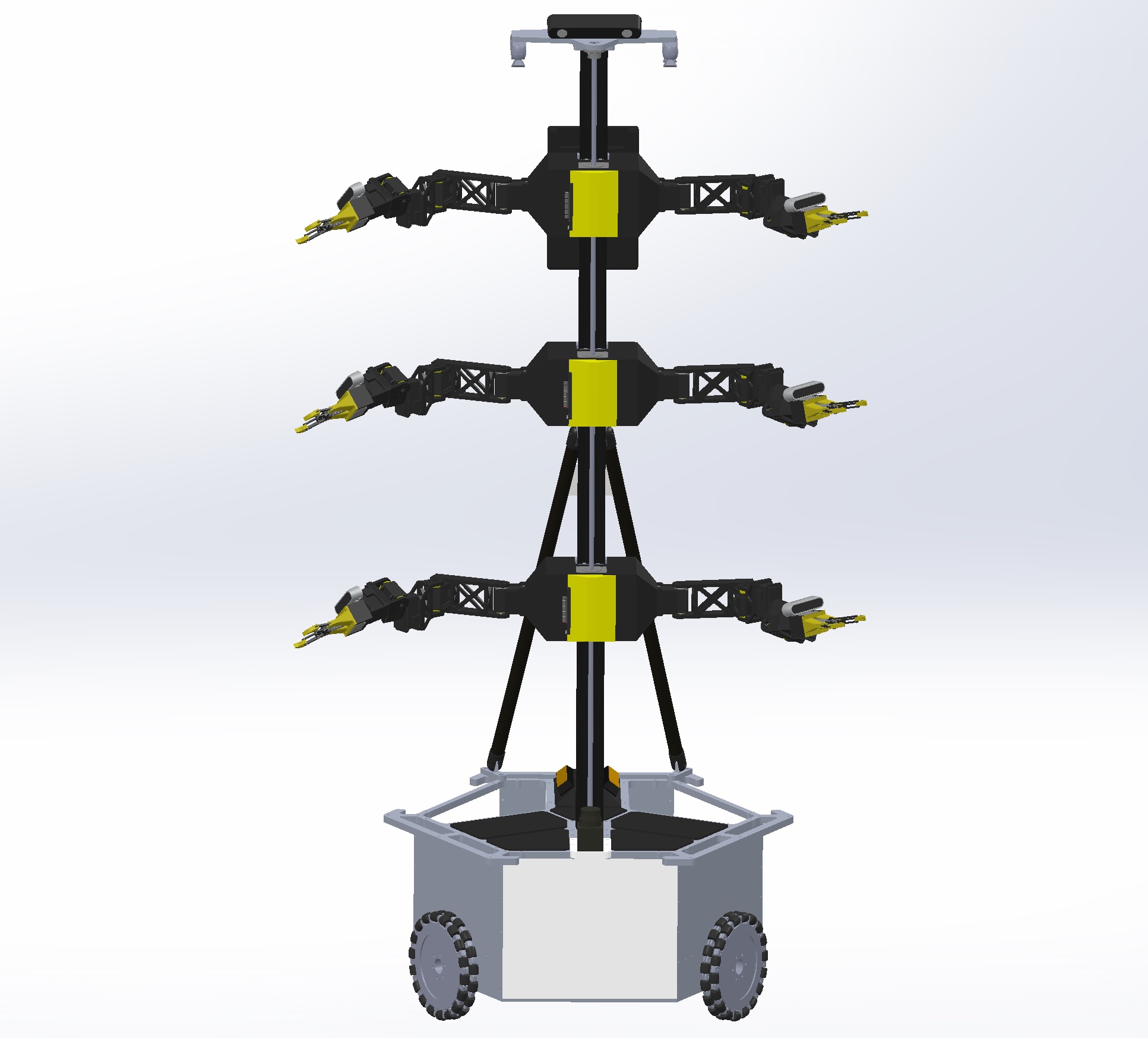

Wait... I should say “robots” because StickBug is not a single robot. It will be a multi-robot system with four agents (one mobile base and three two-armed robots moving on a vertical lift).

We have a talented, motivated, and diverse team that includes horticulturists (Dr. Nicole Waterland and her students), human-systems experts (Dr. Boyi Hu and his students from the University of Florida), and roboticists (Dr. Jason Gross and me, along with undergraduate and graduate students from #WVURobotics). This project will be open sourced, starting with sharing our proposal. If you have any suggestions on our approach or are interested in collaborating on the project, please feel free to contact us.

Attachment: NRI 2021 StickBug Proposal

Program: 2021 National Robotics Initiative (NRI) 3.0

NSF panel recommendation: Highly Competitive

Funding Agency: USDA/NIFA